GitHub - LightDXY/FT-CLIP: CLIP Itself is a Strong Fine-tuner: Achieving 85.7% and 88.0% Top-1 Accuracy with ViT-B and ViT-L on ImageNet

![Enabling Multimodal Generation on CLIP via Vision-Language Knowledge Distillation | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/7f71875f8214dffa4f3276da123c4990a6d437cc/8-Table2-1.png)

[PDF] Enabling Multimodal Generation on CLIP via Vision-Language Knowledge Distillation | Semantic Scholar

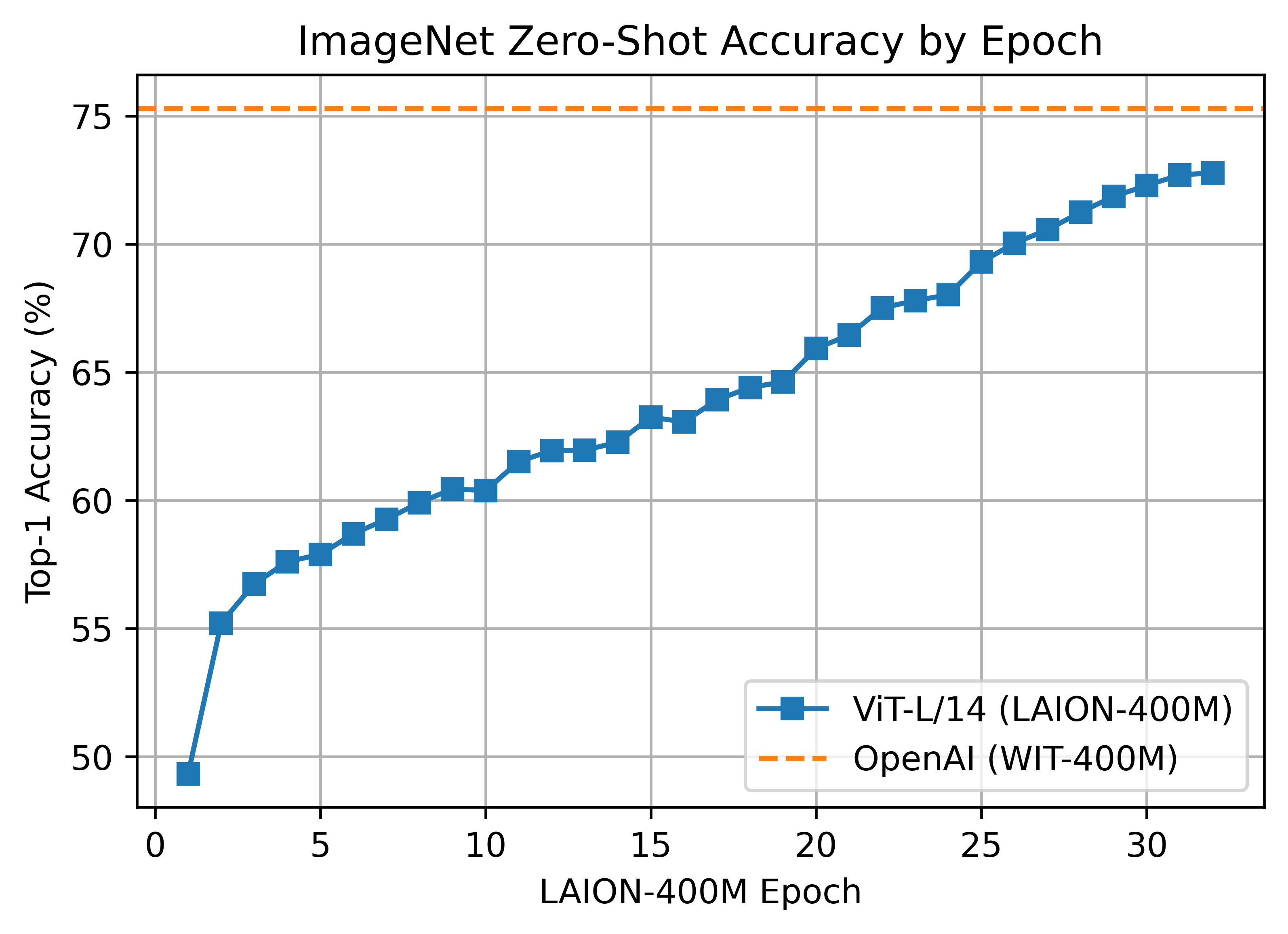

pharmapsychotic on Twitter: "#stablediffusion2 uses the OpenCLIP ViT-H model trained on the LAION dataset so it knows different things than the OpenAI ViT-L we're all used to prompting. To help out with

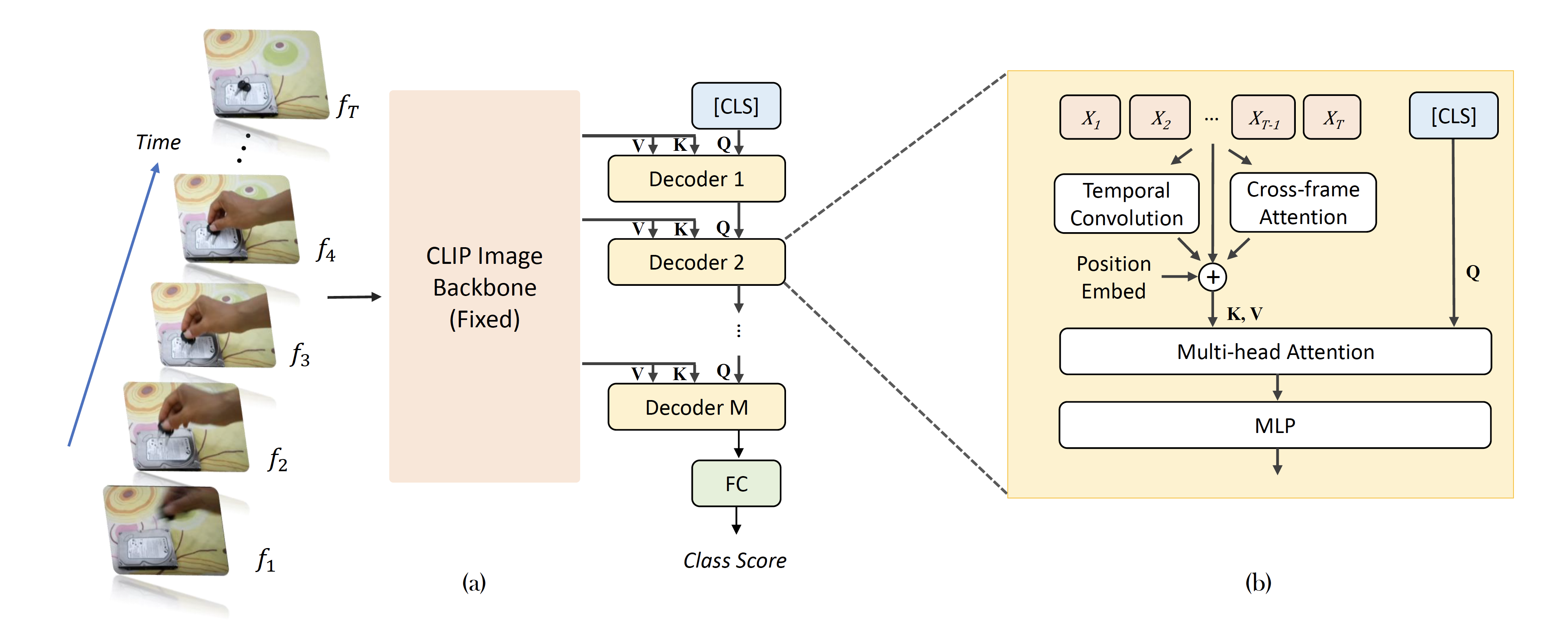

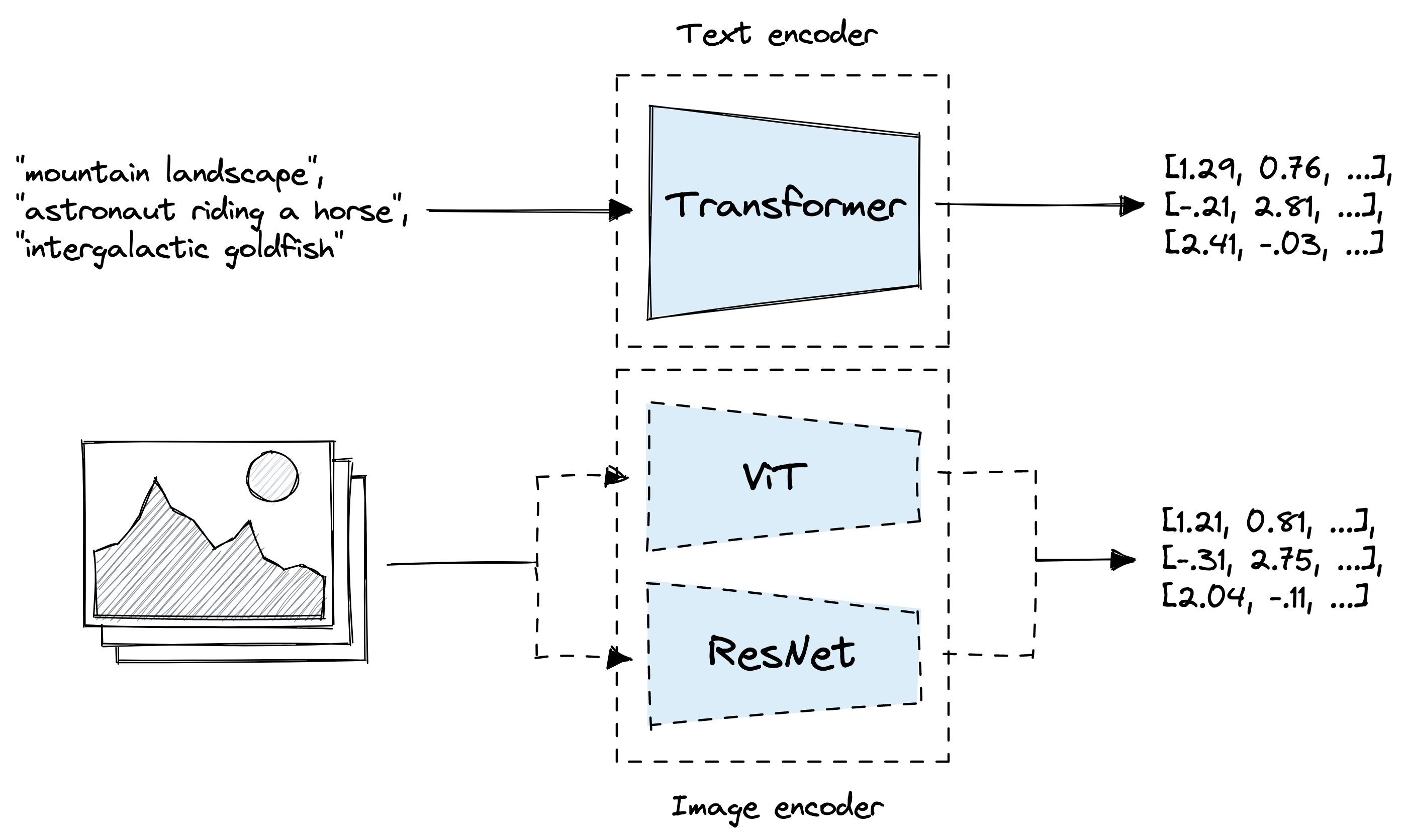

CLIP Itself is a Strong Fine-tuner: Achieving 85.7% and 88.0% Top-1 Accuracy with ViT-B and ViT-L on ImageNet – arXiv Vanity